Leveraging computer vision and smart glasses to bridge the physical and digital worlds for immersive tours

Leveraging the newly released Meta Device Access Toolkit SDK

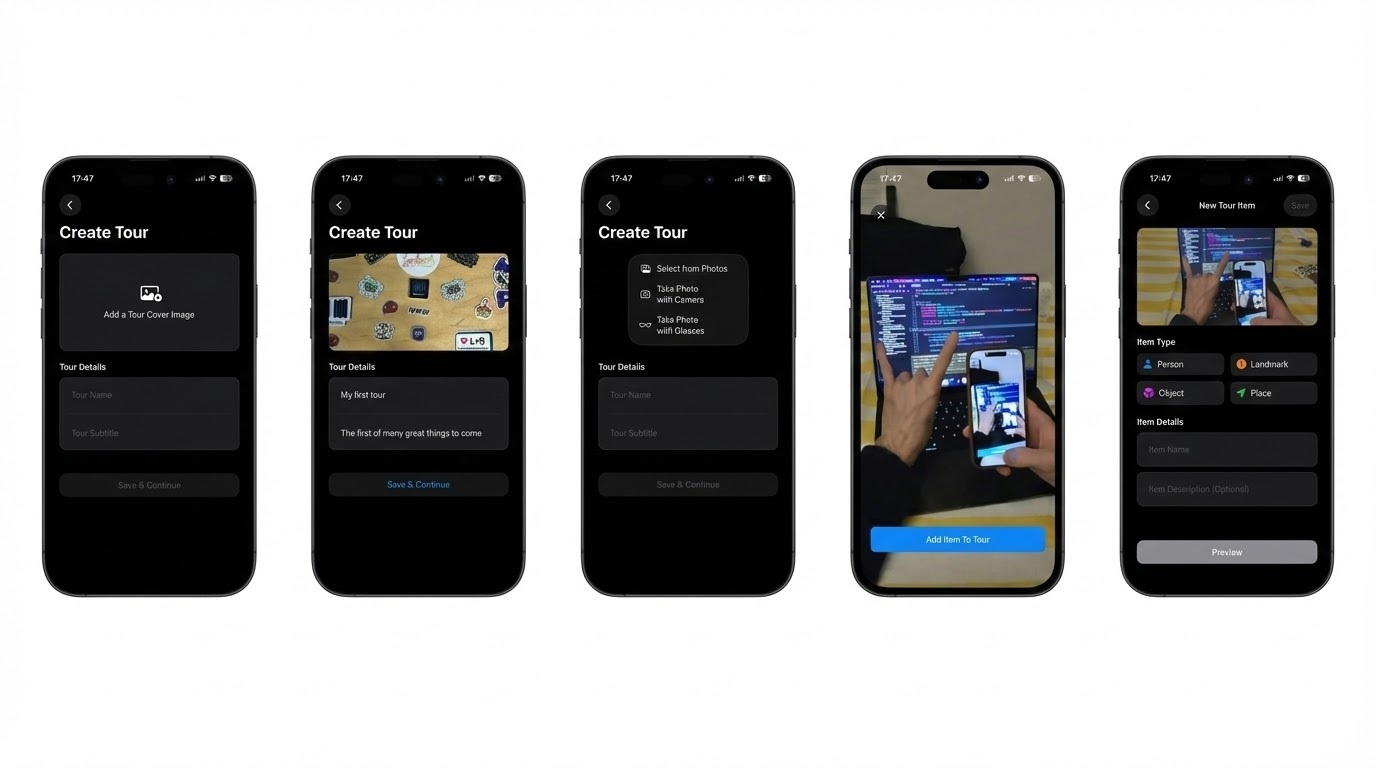

Developing a novel "look and tag" spatial content management system for non-technical curators

Utilizing on-device edge computing to minimize latency for real-time, context-aware visual cues

Background

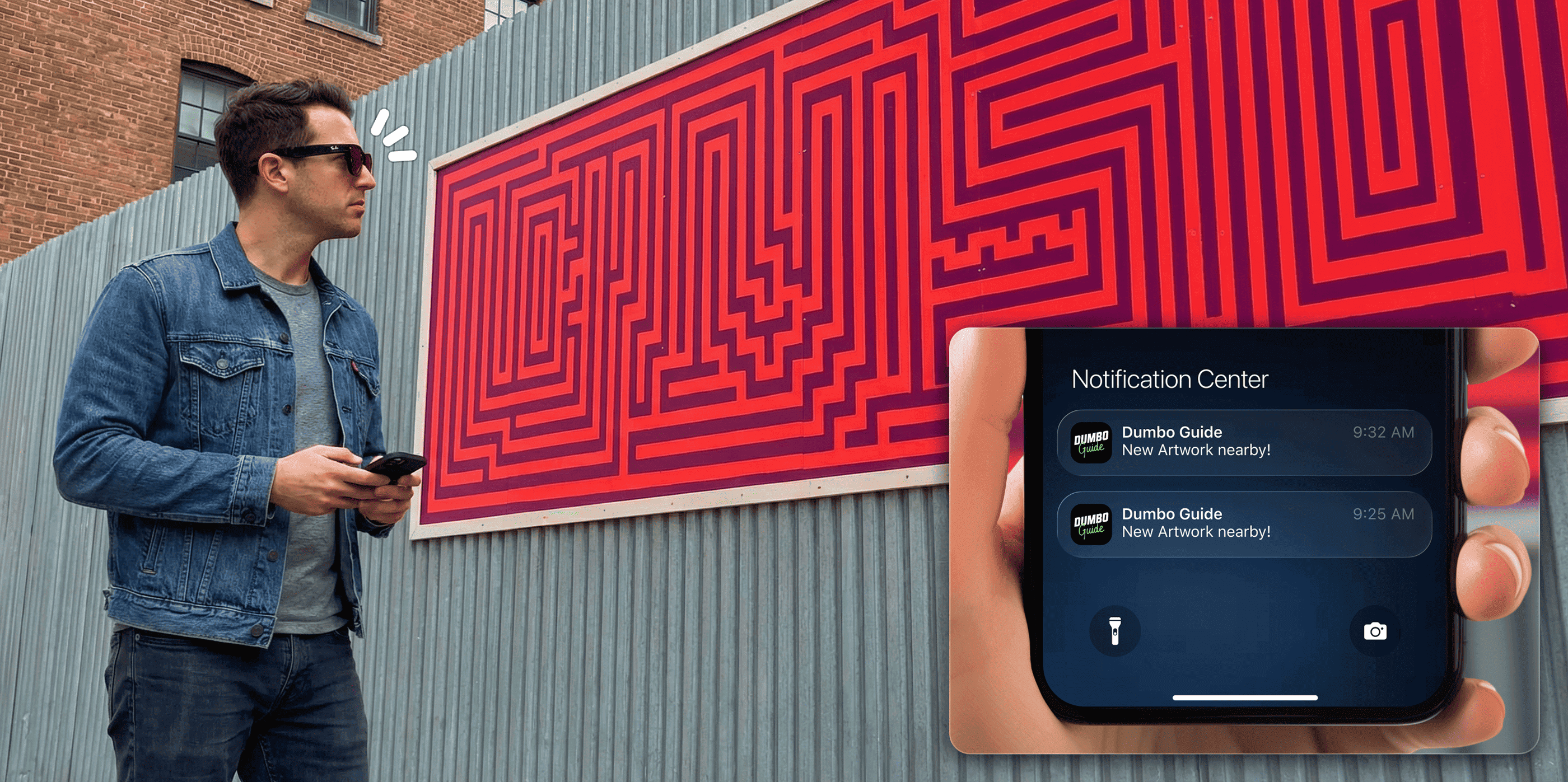

Situated along the East River waterfront, Dumbo is a vibrant Brooklyn neighborhood that serves as New York City's dynamic hub for both technology and the arts. This unique district functions as a massive open-air museum, featuring a dense concentration of galleries, tech startups, and large-scale outdoor public art set against the iconic backdrop of the Manhattan skyline. While this environment offers a rich visual history, traditional digital guides often distract visitors from the immediate surroundings.

The Challenge

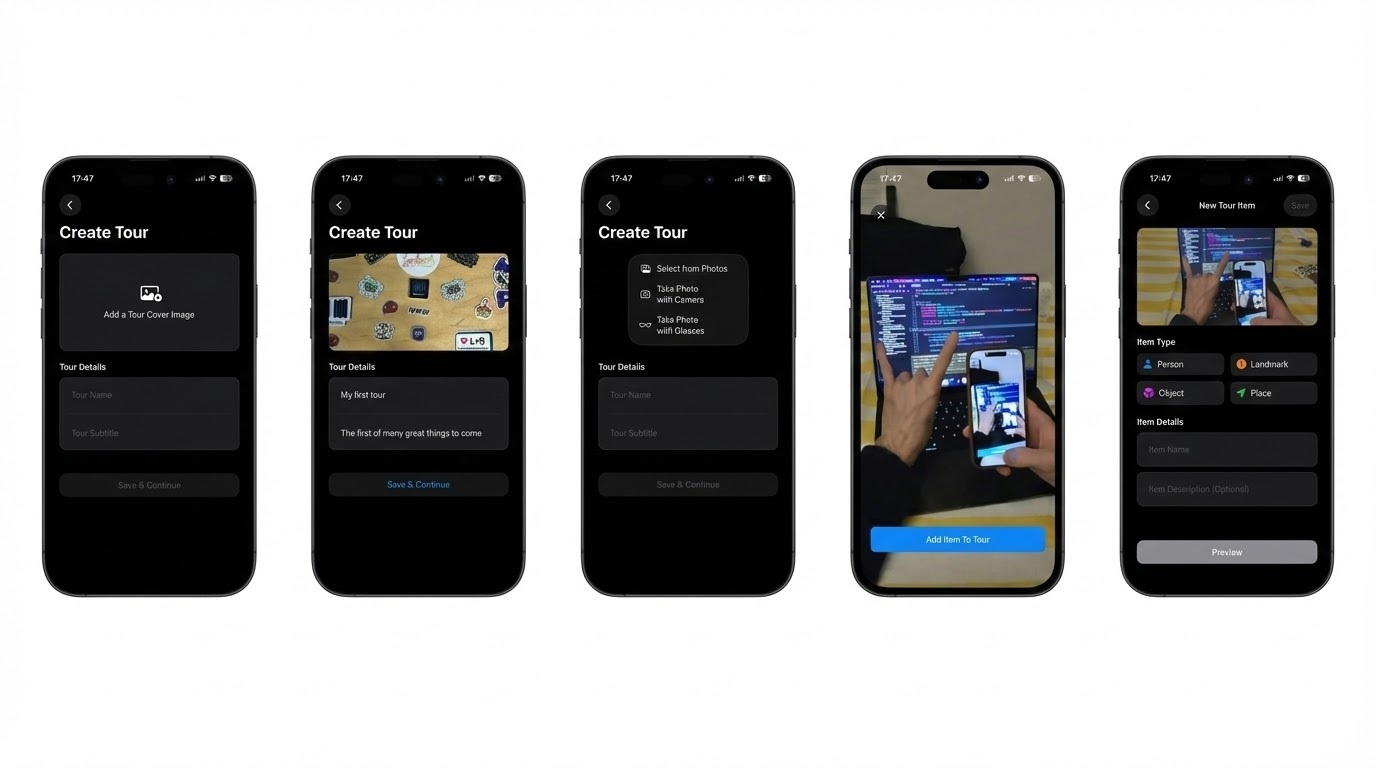

Traditional digital guides force users to look down at screens, breaking their connection with the physical space. L+R set out to pair Ray-Ban Meta smart glasses with a mobile application that runs computer vision. The objective was to recognize objects in real time with minimal latency, keeping the wearer present while exploring an area known for its "cobblestone charm" and abundant public art.

The Solution

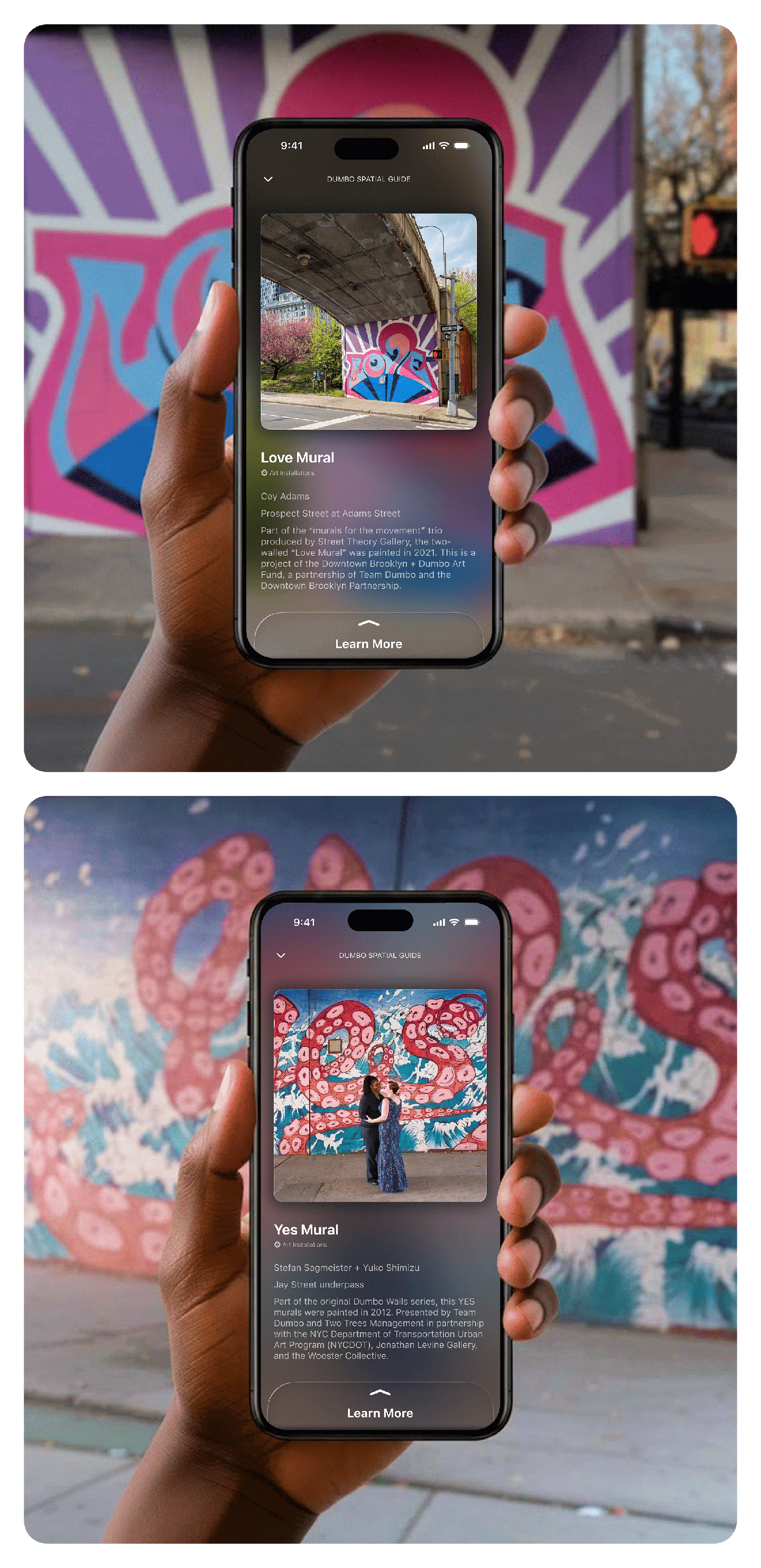

Spatial Guide

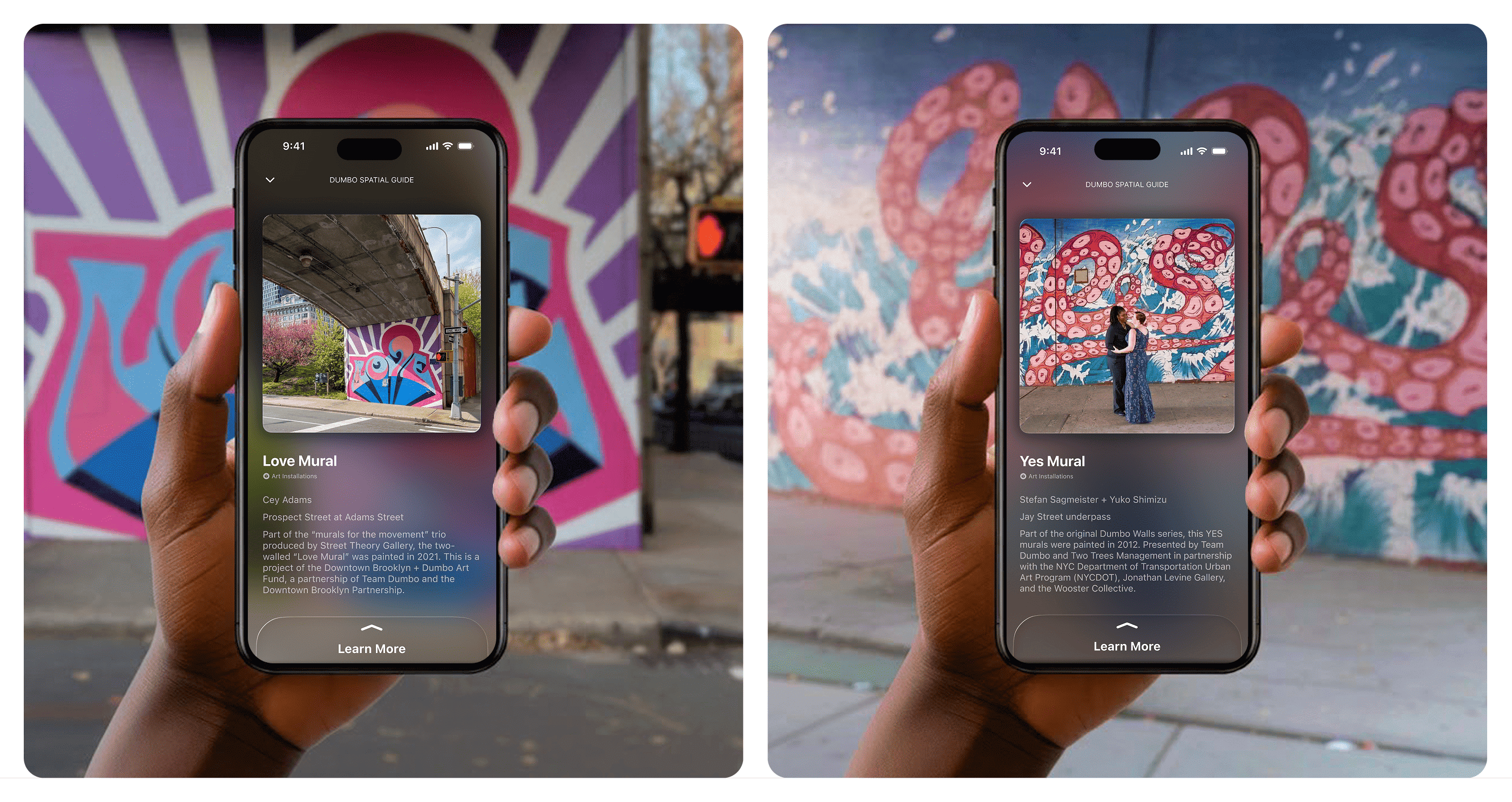

Our Engineering and Design teams collaborated to build an ecosystem where smart glasses, utilizing the Meta Device Access Toolkit, identify artifacts and trigger subtle notifications. We implemented a seamless handoff feature, allowing users to unlock deeper content like videos or 3D models on their phones only when desired.

To streamline operations, we developed a "look and tag" content management system. Organizers train the computer vision model simply by wearing the glasses, looking at an object, and verbally tagging it. This removes the need for complex backend coding, letting curators digitize the neighborhood's eight art walls and projection projects themselves.

The Impact

This platform democratizes spatial computing by allowing curators to digitize physical spaces with ease. By keeping technology peripheral until needed, the experience honors the physical environment, demonstrating that AI and hardware can heighten our connection to the real world rather than distracting from it.

Features

Real-Time Object Recognition

Our Engineering team leveraged the Meta SDK to process video input directly on the device, utilizing edge computing to minimize latency. By training custom computer vision models, the application identifies specific artifacts or landmarks within the user's field of view, triggering immediate, context-aware audio or visual cues without requiring cloud-based processing for every interaction.
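A minimal sketch of how this kind of local matching could work, offered as an illustration rather than a description of the production system. It assumes each video frame has already been reduced to an embedding vector by some vision model, and it swaps in a simple nearest-neighbor comparison against a gallery of tagged artifacts. The `Recognizer` class, the `threshold` and `cooldown_s` values, and the artifact labels are all hypothetical; no Meta SDK calls are shown.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Recognizer:
    # Hypothetical gallery: artifact label -> reference embedding.
    gallery: dict
    threshold: float = 0.85   # assumed minimum similarity to count as a match
    cooldown_s: float = 30.0  # assumed quiet period before repeating a cue
    _last_cue: dict = field(default_factory=dict, init=False)

    def process(self, embedding, now):
        """Return the label to cue for this frame, or None if no cue should fire."""
        best_label, best_score = None, 0.0
        for label, ref in self.gallery.items():
            score = cosine(embedding, ref)
            if score > best_score:
                best_label, best_score = label, score
        if best_label is None or best_score < self.threshold:
            return None  # nothing in view is close enough to a tagged artifact
        last = self._last_cue.get(best_label)
        if last is not None and now - last < self.cooldown_s:
            return None  # the wearer was cued about this artifact recently
        self._last_cue[best_label] = now
        return best_label
```

Because the comparison runs entirely in local memory, a frame can be checked without a network round trip. The cooldown keeps the same mural from triggering a cue on every frame while the wearer keeps looking at it.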

Seamless Device Handoff

Recognizing that smart glasses have optical display limitations for dense media, our Strategy and Design departments developed a "handoff" protocol. When a user engages with an object, a synchronized push notification is sent to their paired mobile device. This allows a deeper dive into high-fidelity content, such as 3D object manipulation or historical video archives, so each device is used for its strengths rather than forcing a poor viewing experience on the glasses.
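To make the handoff concrete, here is a hedged sketch of what the notification payload might contain. The field names, the `tourapp://` URL scheme, and the `Artifact` type are assumptions made for illustration; the actual protocol is not specified here.

```python
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass(frozen=True)
class Artifact:
    artifact_id: str
    title: str
    media_kind: str  # hypothetical values: "video" or "model3d"


def build_handoff(artifact, session_id):
    """Build a notification payload that deep-links the phone to richer content."""
    query = urlencode({"session": session_id, "kind": artifact.media_kind})
    return {
        "title": artifact.title,
        "body": "Open on your phone to explore further.",
        # Hypothetical URL scheme; a real app would register its own.
        "deep_link": f"tourapp://artifact/{artifact.artifact_id}?{query}",
    }
```

For example, `build_handoff(Artifact("wall-3", "Art Wall 3", "video"), "s42")` yields a deep link of `tourapp://artifact/wall-3?session=s42&kind=video`. Carrying the session identifier lets the phone open the same artifact the glasses just recognized.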

In-Situ Model Training

To support stakeholders and venue operators, we built a proprietary content management system that uses the glasses for data entry. Organizers can "look and tag" objects in the physical space, using voice commands to label items and train the recognition model in real time. This lowers the technical barrier to entry, allowing non-technical staff to update tours and manage digital assets directly from the street or showroom floor.
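One way a "look and tag" flow could be structured is sketched below, under stated assumptions: the spoken label has already been transcribed to text, and each look at the object yields an embedding vector. Rather than retraining a model, this sketch averages the captured views into a single reference vector per label, a simple few-shot stand-in for whatever training the real system performs. `TagStore` and `normalize_label` are hypothetical names.

```python
from dataclasses import dataclass, field


def normalize_label(spoken):
    """Turn a transcribed voice tag into a stable key (lowercase, hyphen-joined)."""
    return "-".join(spoken.lower().split())


@dataclass
class TagStore:
    # label key -> list of embeddings captured while looking at the object
    samples: dict = field(default_factory=dict)

    def tag(self, spoken_label, embedding):
        """Store one more view of the object under the spoken label."""
        key = normalize_label(spoken_label)
        self.samples.setdefault(key, []).append(list(embedding))
        return key

    def prototype(self, key):
        """Average the stored views into a single reference vector."""
        views = self.samples[key]
        return [sum(col) / len(views) for col in zip(*views)]
```

A curator who tags the same wall from two angles ends up with one averaged reference, so a new object becomes recognizable as soon as it has been tagged, with no backend deployment step.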